In this digital age, children are exposed to overwhelming amounts of information online, some of it unverified and increasingly generated by non-human sources, such as AI-driven language models. As children grow older, learning to assess a source’s reliability becomes an important part of developing critical thinking.

Children aged 3 to 5 years selectively trust informants based on their past accuracy, whether the informant is a human or a robot, according to a study titled “Younger, not older, children trust an inaccurate human informant more than an inaccurate robot informant,” published in the journal Child Development.

“Children do not just trust anyone to teach them labels; they trust those who were reliable in the past. We believe that this selectivity in social learning reflects young children’s emerging understanding of what makes a good (reliable) source of information,” explained Li Xiaoqian, a research scholar at Singapore University of Technology and Design (SUTD). She co-authored the study with her Ph.D. supervisor, Professor Yow Wei Quin, a psychology professor and head of the Humanities, Arts and Social Sciences cluster at SUTD. “The question at stake is how young children use their intelligence to decide when to learn and whom to trust,” she said.

In the study, children aged 3 to 5 from Singapore preschools, including ChildFirst, Red SchoolHouse and Safari House, were divided at the median age of 4.58 years into “younger” and “older” groups.

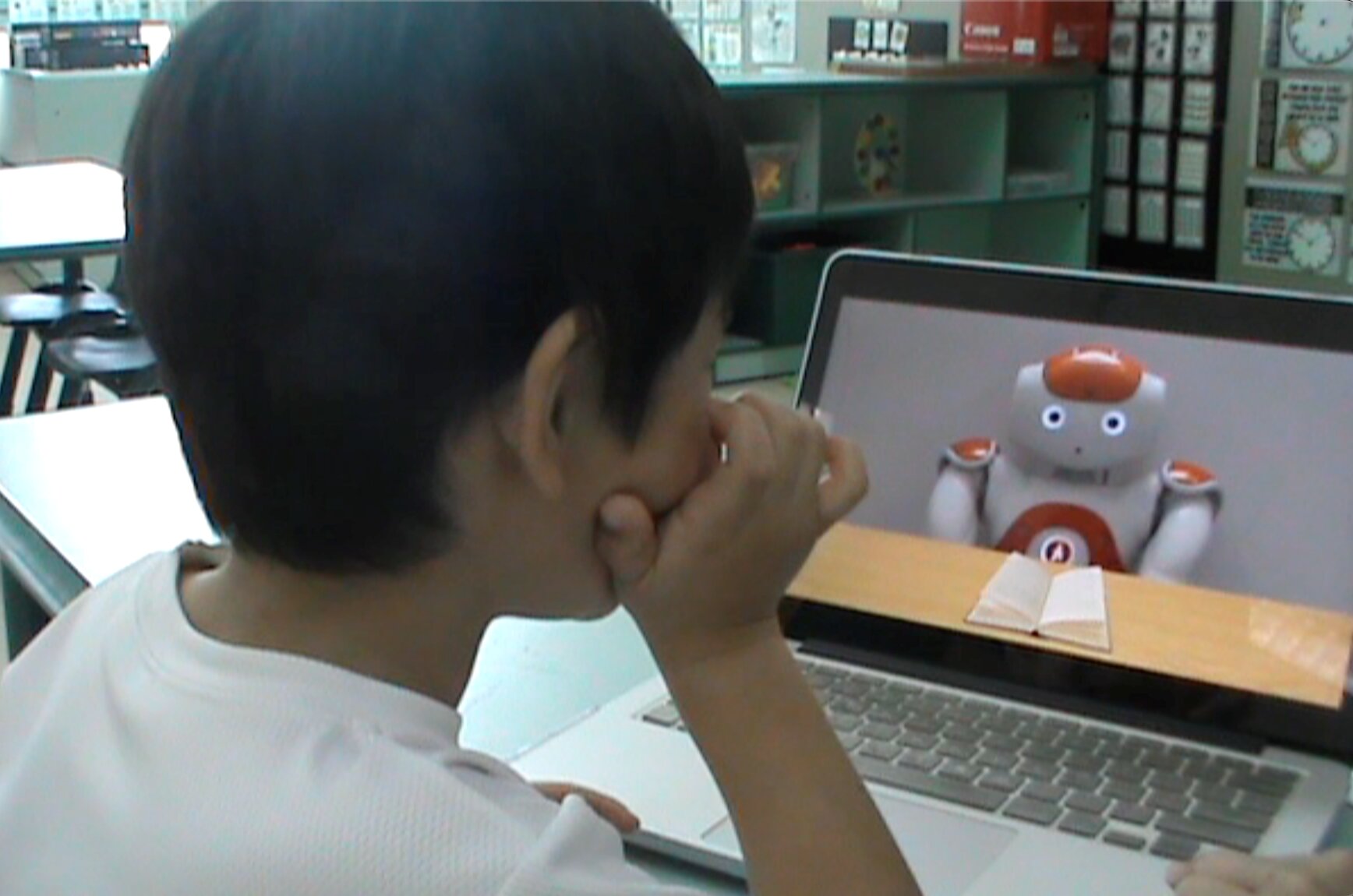

Each child was paired with either a robot or a human informant, which gave either accurate or inaccurate labels, such as “ball” or “book,” for objects. The researchers then tested whether the informant’s identity (human or robot), the informant’s track record of accuracy, and the child’s age influenced whether the child trusted the informant to label things correctly in the future.

Each participant saw only one informant during the study, and trust was measured by the child’s willingness to accept new information from that informant. The robot informant was NAO, a humanoid social robot made by SoftBank Robotics, which has a human-like but robotic voice.

To keep conditions comparable, the human informant matched her movements to those of the robot. An experimenter was also seated next to the participant to ask the necessary questions, so that the participant would not feel pressured to agree with the informant.

The study found that children were willing to accept new information from both human and robot informants who had previously been accurate, but not from informants who had made mistakes in the past, especially when the informant was a robot. Age also mattered: younger children were more likely to accept information from an unreliable human than from an unreliable robot, whereas older children distrusted or rejected information from an unreliable informant, whether human or robot.

“These results imply that younger and older children may have different selective trust strategies, especially in the way they use informants’ reliability and identity cues when deciding whom to trust. Together with other research on children’s selective trust, we show that as children get older, they may increasingly rely on reliability cues to guide their trust behavior,” said Dr. Li.

Previous research has shown that children rely on factors such as an informant’s age, familiarity, and language to judge whether the informant is reliable. Younger children may rely more on identity cues like these than on epistemic evidence, such as the informant’s record of accuracy. As they get older, children may place more emphasis on “what you know” than on “who you are” when deciding whether to trust an informant.

This is the first study to ask the questions: (1) Do children draw different inferences about robots with varying track records of accuracy? and (2) Are these inferences comparable to those about humans?

“Addressing these questions will provide a unique perspective on the development of trust and social learning among children who are growing up alongside various sources of information, including social robots,” said Prof Yow.

This research has significant implications for pedagogy, as robots and other non-human educational tools are increasingly integrated into classrooms. Children today may not perceive robots to be as trustworthy as humans if they have had little interaction with robots. However, as children gain more exposure to smart machines, they may come to see robots as intelligent and reliable sources of knowledge.

Future studies could explore how selective learning develops beyond word learning, in domains such as tool use, emotional expression congruency, or episodic domains such as location learning. For now, the researchers hope their findings will be taken into account in the design of learning tools for young children.

“Designers should consider the impact of perceived competence when building robots and other AI-driven educational tools for young children. Recognizing the developmental changes in children’s trust of humans versus robots can guide the creation of more effective learning environments, ensuring that the use of technologies aligns with children’s developing cognitive and social needs,” said Prof Yow.

More information:

Xiaoqian Li et al, Younger, not older, children trust an inaccurate human informant more than an inaccurate robot informant, Child Development (2023). DOI: 10.1111/cdev.14048

Provided by Singapore University of Technology and Design

Citation: Robots vs. humans: Which do children trust more when learning new information? (2023, December 22) retrieved 22 December 2023 from https://phys.org/news/2023-12-robots-humans-children.html
