Recent advances in agricultural computer vision have relied heavily on deep learning models that, despite their success in general tasks, are rarely fine-tuned for agriculture. Because these models start from weights learned on non-agricultural datasets, training takes longer, consumes more resources, and yields lower performance.

Although transfer learning has proven effective at compensating for limited data, current research highlights two gaps: existing pre-trained models do not adequately capture agriculture-relevant features, and no substantial agriculture-specific dataset exists. Further challenges include insufficient task-specific data and uncertainty about how well data augmentation works in agricultural contexts.

To tackle these issues, it is imperative to explore alternative strategies for pre-trained models and to establish a centralized agricultural dataset, both of which would improve data efficiency and model performance on agriculture-specific tasks.

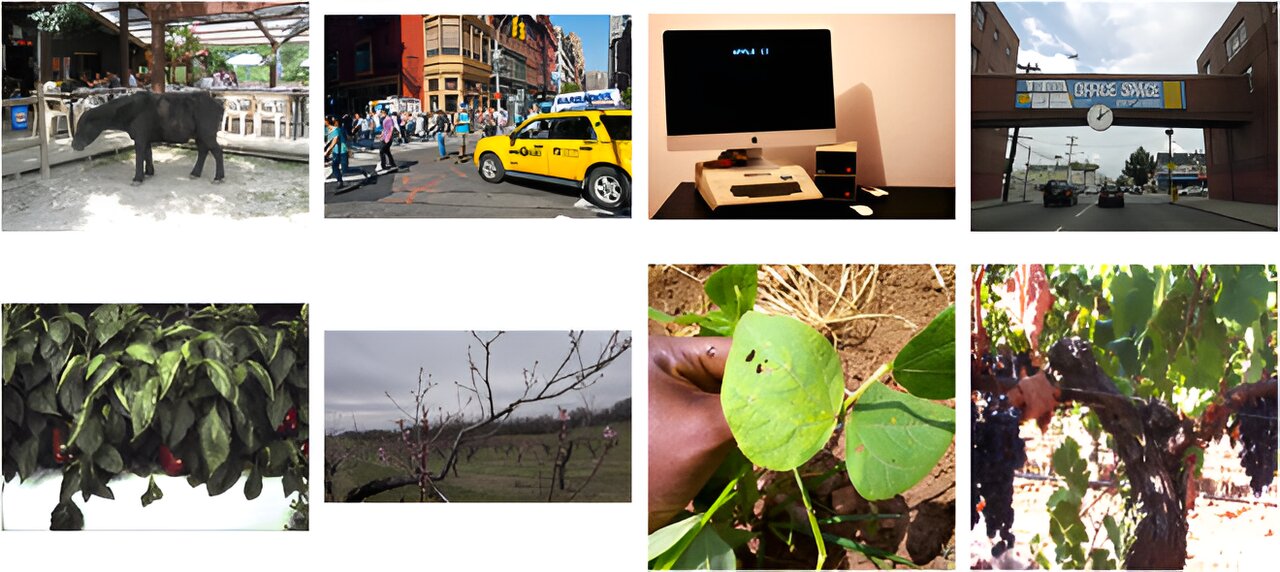

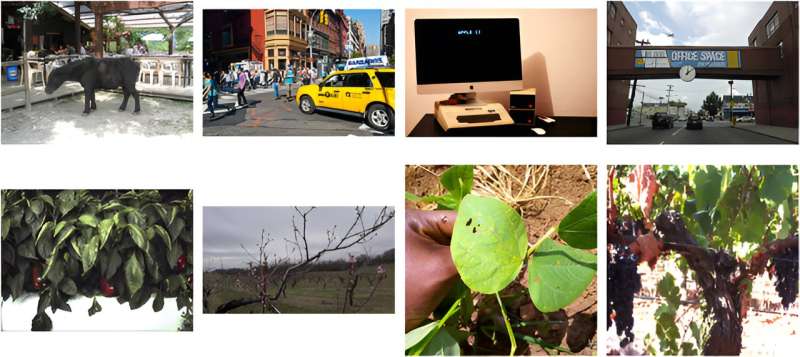

In a study published in Plant Phenomics, researchers created a novel framework for agricultural deep learning by standardizing a wide range of public datasets for three distinct tasks and constructing benchmarks and pre-trained models.

They employed deep learning methods that are common elsewhere but largely unexplored in agriculture, improving data efficiency and model performance without major alterations to existing pipelines. The research showed that standardized benchmarks enable models to perform comparably to or better than existing benchmarks. These resources are available through AgML (github.com/Project-AgML/AgML).
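As a rough illustration of what standardizing annotations across public datasets can involve, the sketch below converts two widely used bounding-box conventions into one shared layout. The function names, the choice of a COCO-style `(x, y, width, height)` target, and the sample values are assumptions made for illustration; they are not taken from the study or from the AgML code.

```python
from typing import Tuple

# Shared target layout (an assumption for this sketch):
# (x_min, y_min, width, height) in pixels, as in COCO-style annotations.
CocoBox = Tuple[float, float, float, float]


def yolo_to_coco(box: Tuple[float, float, float, float],
                 image_width: int, image_height: int) -> CocoBox:
    """Convert a YOLO-style box to the shared layout.

    YOLO-style boxes are (center_x, center_y, width, height),
    each normalized to the range [0, 1] by the image size.
    """
    cx, cy, w, h = box
    x_min = (cx - w / 2) * image_width
    y_min = (cy - h / 2) * image_height
    return (x_min, y_min, w * image_width, h * image_height)


def voc_to_coco(box: Tuple[float, float, float, float]) -> CocoBox:
    """Convert a Pascal VOC-style box to the shared layout.

    VOC-style boxes are (x_min, y_min, x_max, y_max) in pixels.
    """
    x_min, y_min, x_max, y_max = box
    return (x_min, y_min, x_max - x_min, y_max - y_min)
```

Once every source dataset passes through a converter like this, a single training pipeline can consume all of them without format-specific branches, which is the practical benefit of a centralized, standardized collection.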

For object detection, agricultural pre-trained weights substantially outperformed standard baselines, converging faster and reaching higher precision, especially for certain fruits. Similarly, in semantic segmentation, models with agricultural pre-trained backbones outperformed those with general-purpose backbones and reached strong performance quickly.

These findings underscore that even subtle adjustments to training processes can significantly improve agricultural deep learning tasks. The study also examined the efficacy of data augmentations and found that spatial augmentations outperformed visual ones, suggesting that they can improve model generalizability and performance under diverse conditions.

However, the impact varied across tasks and conditions, highlighting the nuanced nature of augmentation application. Additionally, researchers explored the effects of annotation quality, revealing that models could still perform well even with lower-quality annotations, suggesting a potential for broader data use and annotation strategies.
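To make the distinction between spatial and visual augmentations concrete, here is a minimal sketch in plain Python. A spatial augmentation (here, a horizontal flip) changes image geometry, so bounding boxes must be transformed along with the pixels; a visual augmentation (here, a brightness change) alters pixel values only. The function names, the tiny grayscale image representation, and the box format are hypothetical choices for illustration, not the augmentations or code used in the study.

```python
from typing import List, Tuple

Image = List[List[float]]        # grayscale image: rows of pixel values in [0, 1]
Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max), x_max/y_max exclusive


def horizontal_flip(image: Image, boxes: List[Box]) -> Tuple[Image, List[Box]]:
    """Spatial augmentation: mirror the image left-to-right and move the boxes with it."""
    width = len(image[0])
    flipped = [row[::-1] for row in image]
    # After mirroring, a box's old right edge becomes its new left edge.
    new_boxes = [
        (width - x_max, y_min, width - x_min, y_max)
        for x_min, y_min, x_max, y_max in boxes
    ]
    return flipped, new_boxes


def adjust_brightness(image: Image, boxes: List[Box],
                      factor: float) -> Tuple[Image, List[Box]]:
    """Visual augmentation: scale pixel intensities and clamp to [0, 1]; boxes are unchanged."""
    brightened = [[min(1.0, max(0.0, p * factor)) for p in row] for row in image]
    return brightened, list(boxes)
```

In this sketch, flipping a 4-pixel-wide image moves a box at `(0, 0, 1, 2)` to `(3, 0, 4, 2)`, whereas a brightness change leaves the same box exactly where it was.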

In summary, this work not only advances the field of agricultural deep learning through a novel set of standardized datasets, benchmarks, and pre-trained models but also provides a practical guide for future research. By demonstrating that minor training adjustments can lead to significant improvements, it opens pathways to more efficient and effective agricultural deep learning, ultimately contributing to the broader goal of advancing agricultural technology and productivity.

More information:

Amogh Joshi et al., Standardizing and Centralizing Datasets for Efficient Training of Agricultural Deep Learning Models, Plant Phenomics (2023). DOI: 10.34133/plantphenomics.0084

Citation:

Enhancing model performance and data efficiency through standardization and centralization (2023, December 29), retrieved 30 December 2023 from https://phys.org/news/2023-12-efficiency-standardization-centralization.html

